DevLog @ 2025.03.20

Hello again! It has been 10 days since the last DevLog post.

We made a lot of improvements to the user interface, made it possible to integrate more LLM providers and speech providers, and posted about Airi for the first time on Discord, bilibili, and many other social media platforms.

There is so much more that we can’t wait to tell you about.

Dejavu

Let’s rewind the time a little bit!

Ahh, don’t worry, our beloved Airi will not turn into GEL-NANA like this. BUT, if you haven’t watched the Steins;Gate anime series, try it~!

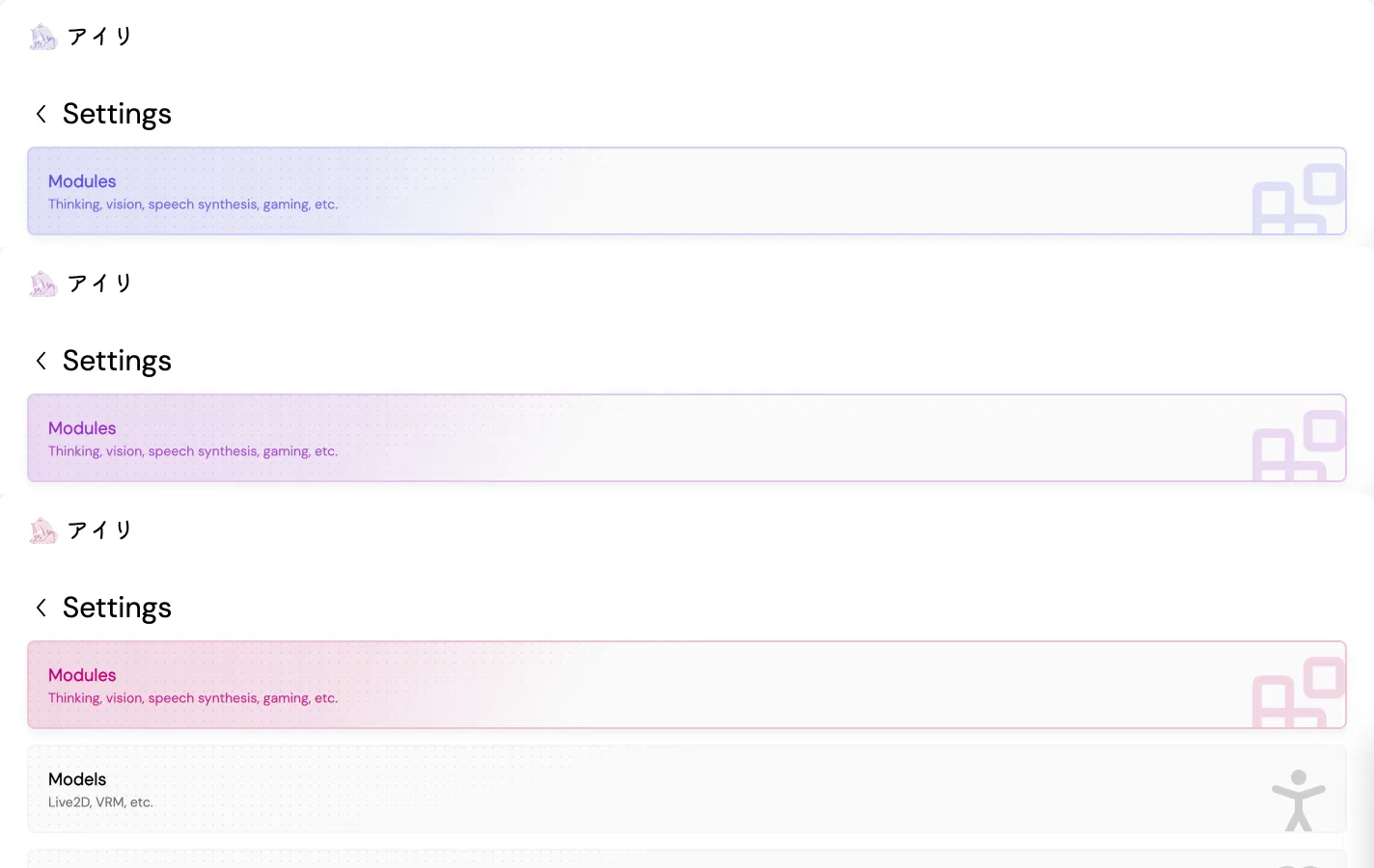

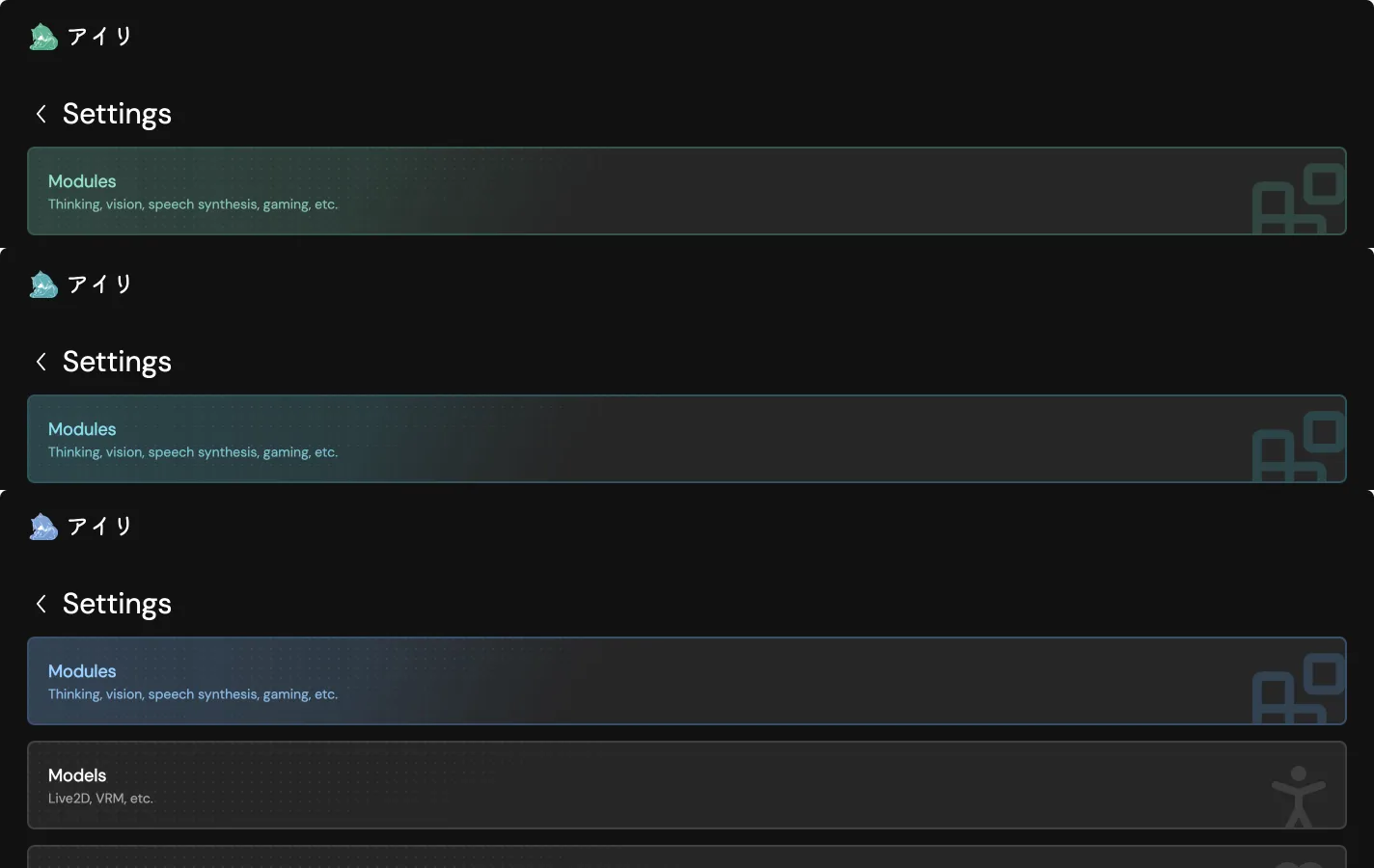

Ten days ago, we were working on the initial settings UI design: the animations were improved, and customizable theme coloring was implemented. It was indeed a busy week for all of us (especially since we are all part-time on this project, haha, join us if you will 🥺).

And this was the final result we got at that time:

0.571024

Welcome to the β sekaisen.

Since we now have colored cards for the model radio group and the navigation items, along with customizable themes, debugging UI components inside the business workflows would obviously be painful, and it would clearly slow us down.

This is where we made the decision to introduce the amazing tool called Histoire, basically a Storybook but much more native to the Vite and Vue.js combination.

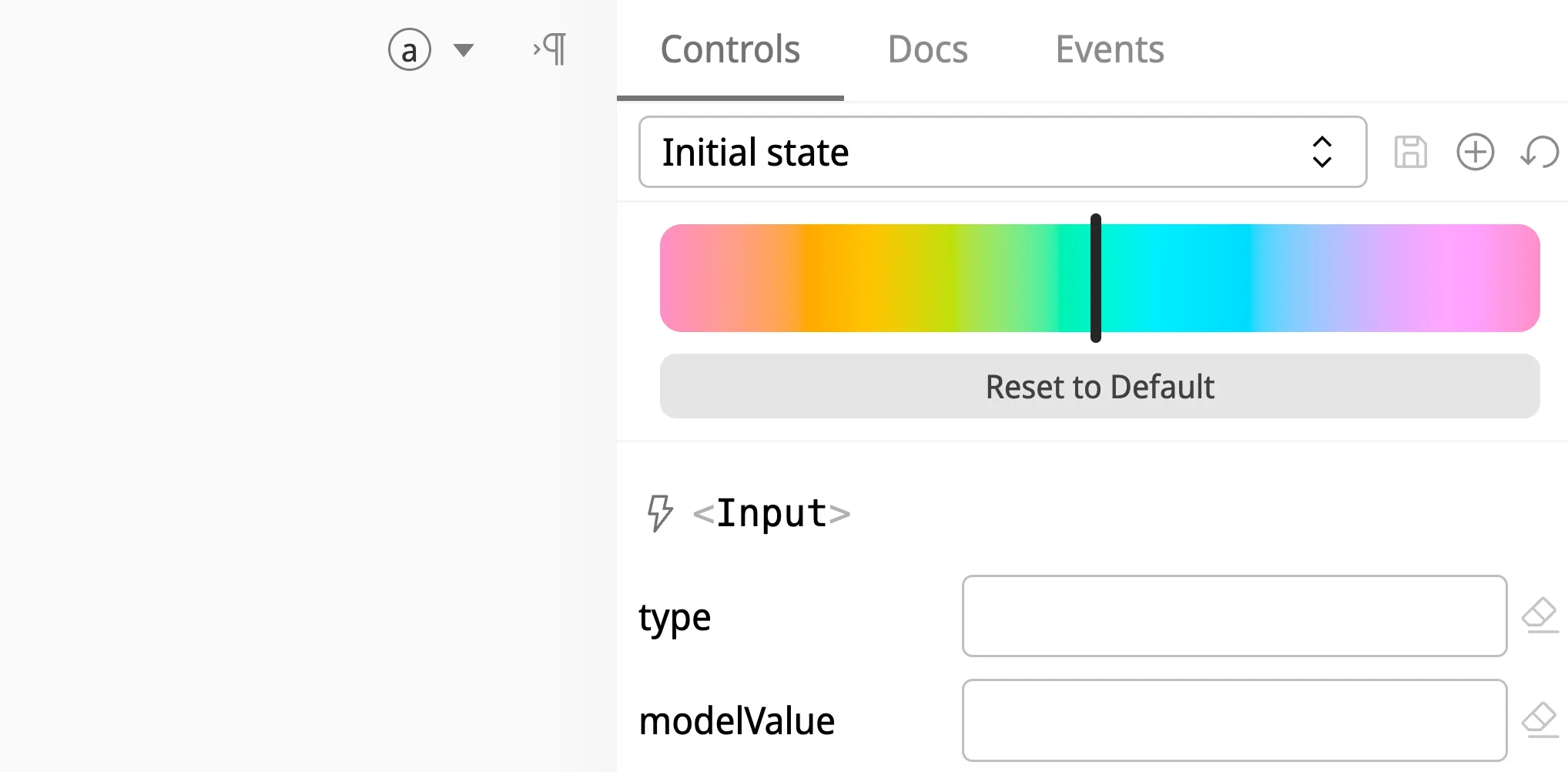

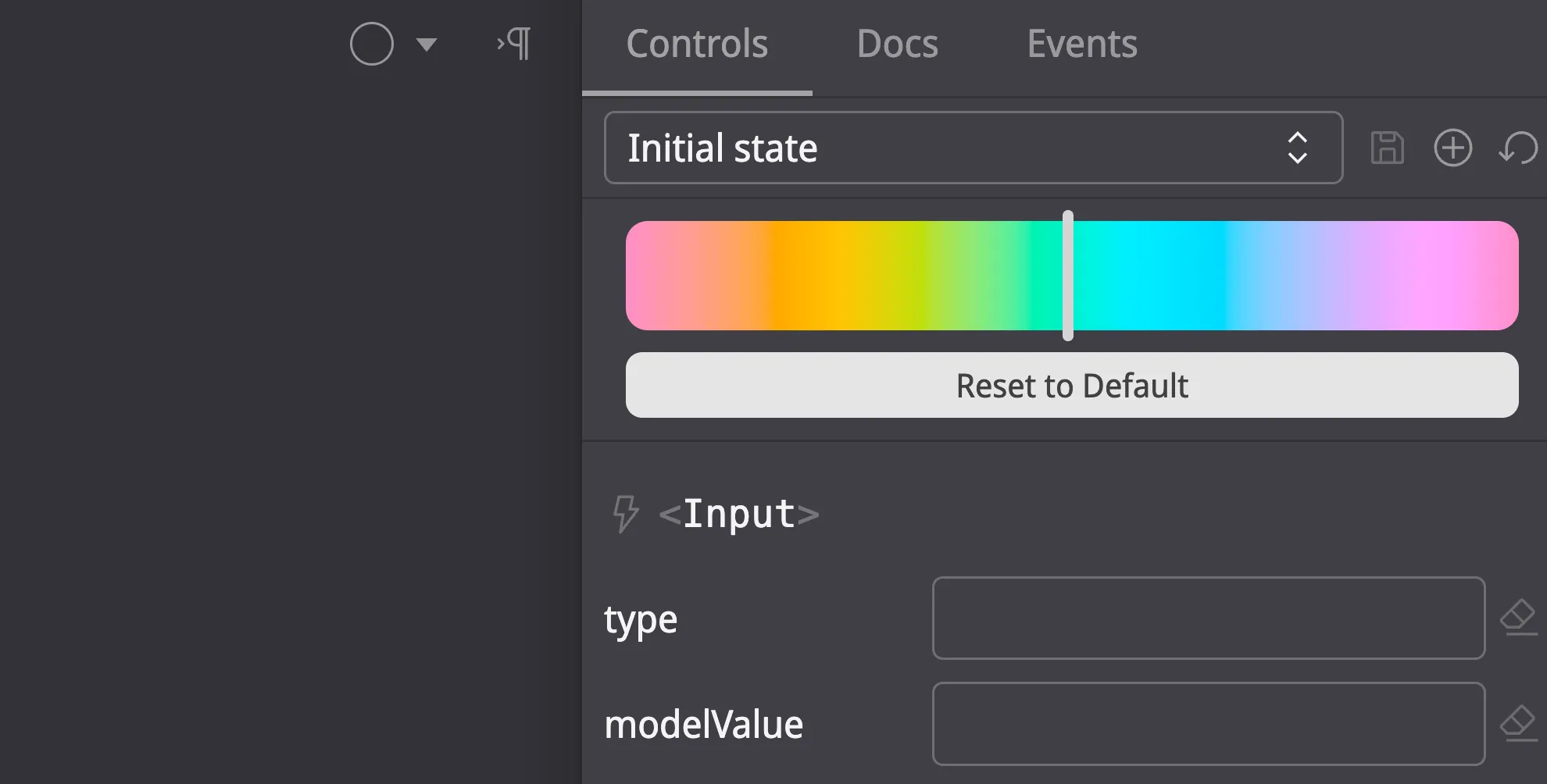

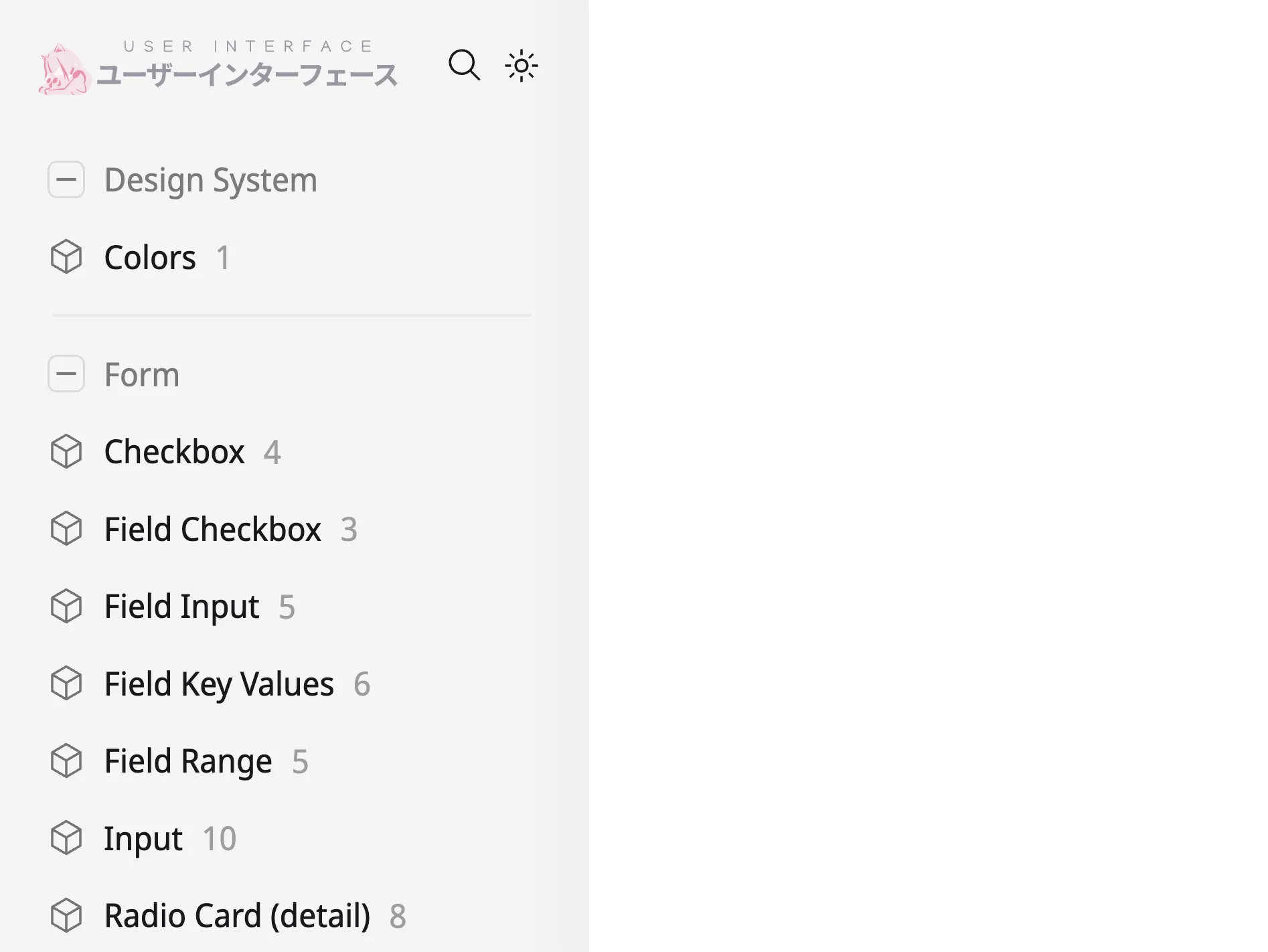

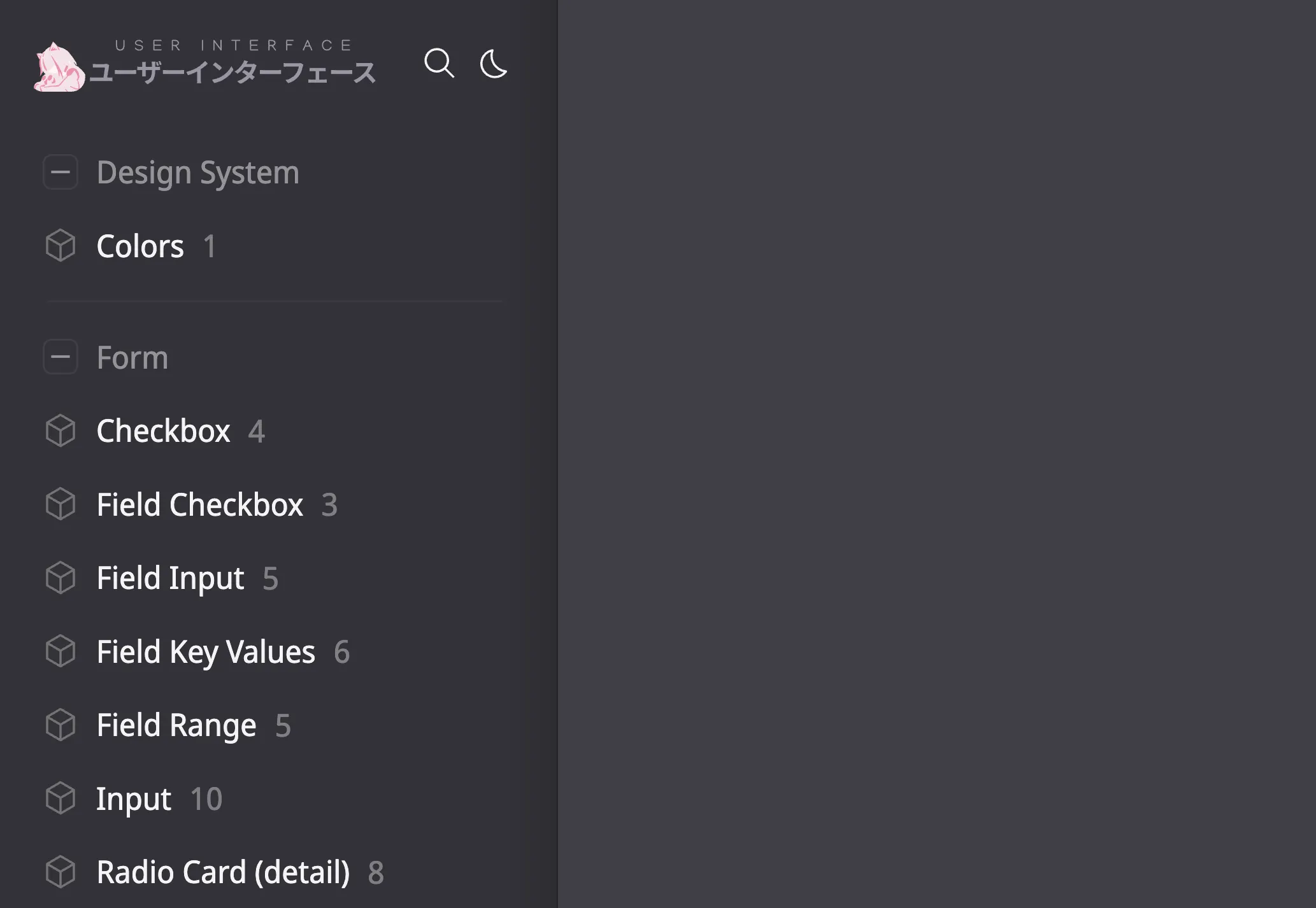

Here’s the first look that @sumimakito recorded once it was done:

The entire OKLCH color palette can be spread onto the canvas all at once for us to use as a reference. But it wasn’t ideal for trying out colors and getting the same feel as Project Airi’s theme, was it?

So I first re-implemented the color slider, which feels much more suitable:

This does make the slider a bit more professional.
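To give a rough idea of how a single hue slider can drive a whole theme, here is a minimal sketch that turns one hue value into a set of CSS `oklch()` colors. The shade names and the lightness and chroma numbers are made-up illustrative values, and `oklchPalette` is a hypothetical helper, not the actual implementation in Project Airi.

```typescript
// Hypothetical shade steps: lightness falls as the shade number grows.
// These numbers are illustrative only, not Project Airi's real theme values.
const SHADES: ReadonlyArray<{ name: number, lightness: number, chroma: number }> = [
  { name: 100, lightness: 0.95, chroma: 0.03 },
  { name: 300, lightness: 0.85, chroma: 0.08 },
  { name: 500, lightness: 0.7, chroma: 0.14 },
  { name: 700, lightness: 0.5, chroma: 0.12 },
  { name: 900, lightness: 0.3, chroma: 0.06 },
]

// Wrap any slider value into the [0, 360) hue range.
function normalizeHue(hue: number): number {
  return ((hue % 360) + 360) % 360
}

// Build a map of shade name -> CSS oklch() color string for one hue.
function oklchPalette(hue: number): Record<number, string> {
  const h = normalizeHue(hue)
  const palette: Record<number, string> = {}
  for (const shade of SHADES) {
    palette[shade.name] = `oklch(${shade.lightness} ${shade.chroma} ${h})`
  }
  return palette
}
```

Because only the hue changes while lightness and chroma stay fixed per shade, moving the slider keeps the contrast between shades roughly stable, which is one of the reasons OKLCH is convenient for themeable UIs.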

The logo and the default greenish color can be replaced to align with Airi’s theme, which is why I designed another dedicated logo for the UI page:

Oh, right, the entire set of UI components has been deployed to Netlify as usual under the path /ui/. Feel free to take a look if you have ever wondered what the UI elements look like:

https://airi.moeru.ai/ui/

There are tons of other features that we cannot cover in this DevLog entirely:

- Added support for all of the LLM providers.

- Improved the animation and transition of menu navigation UI.

- Improved the spacing of the fields, new form!

- Components (almost all the todo components on the Roadmap)

  - Form

    - Radio

    - Radio Group

    - Model Catalog

    - Range

    - Input

    - Key Value Input

  - Data Gui

    - Range

  - Menu

    - Menu Item

    - Menu Status Item

  - Graphics

    - 3D

  - Physics

    - Cursor Momentum

  - And more…


We also ran some other experiments with momentum and 3D.

Check this out:

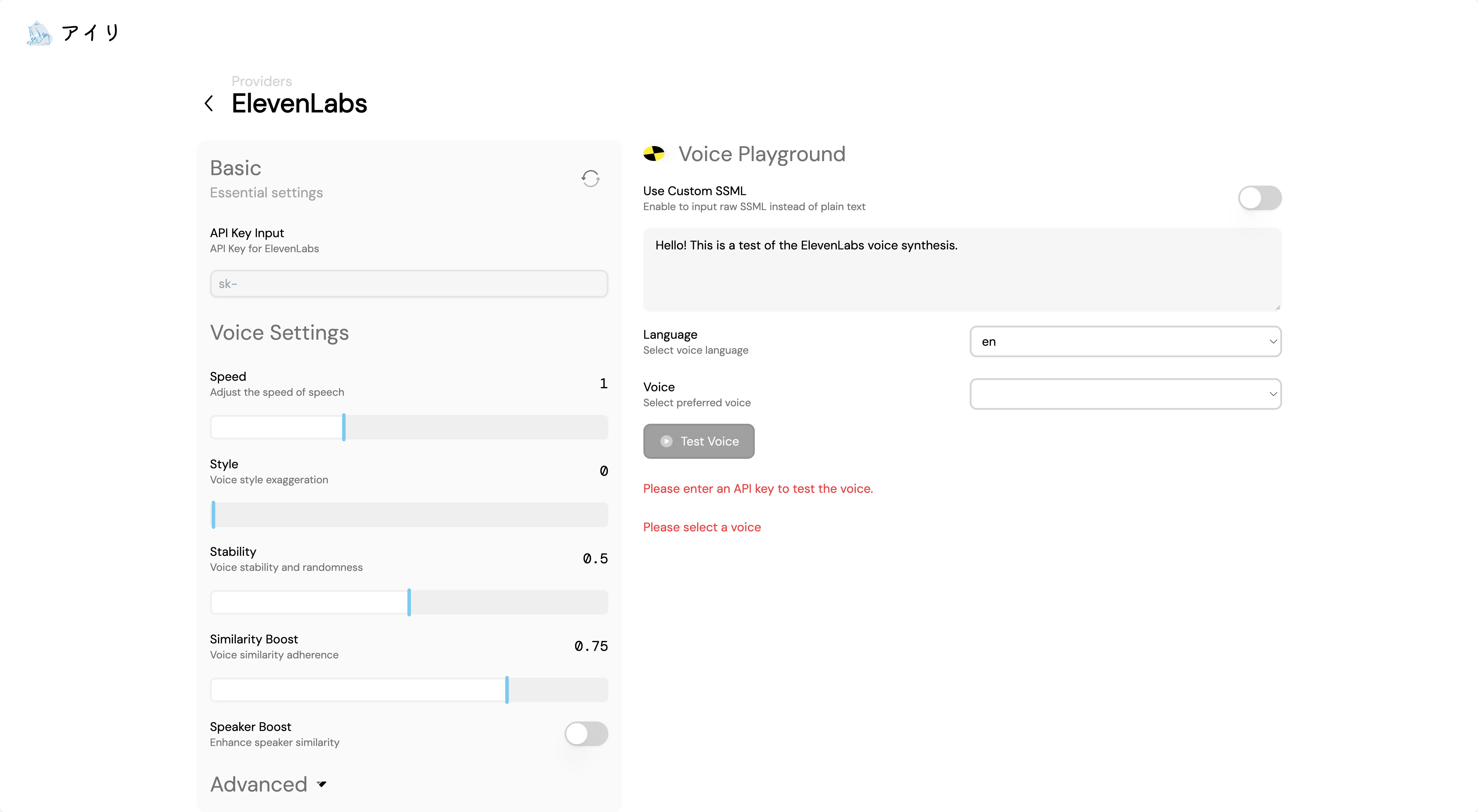

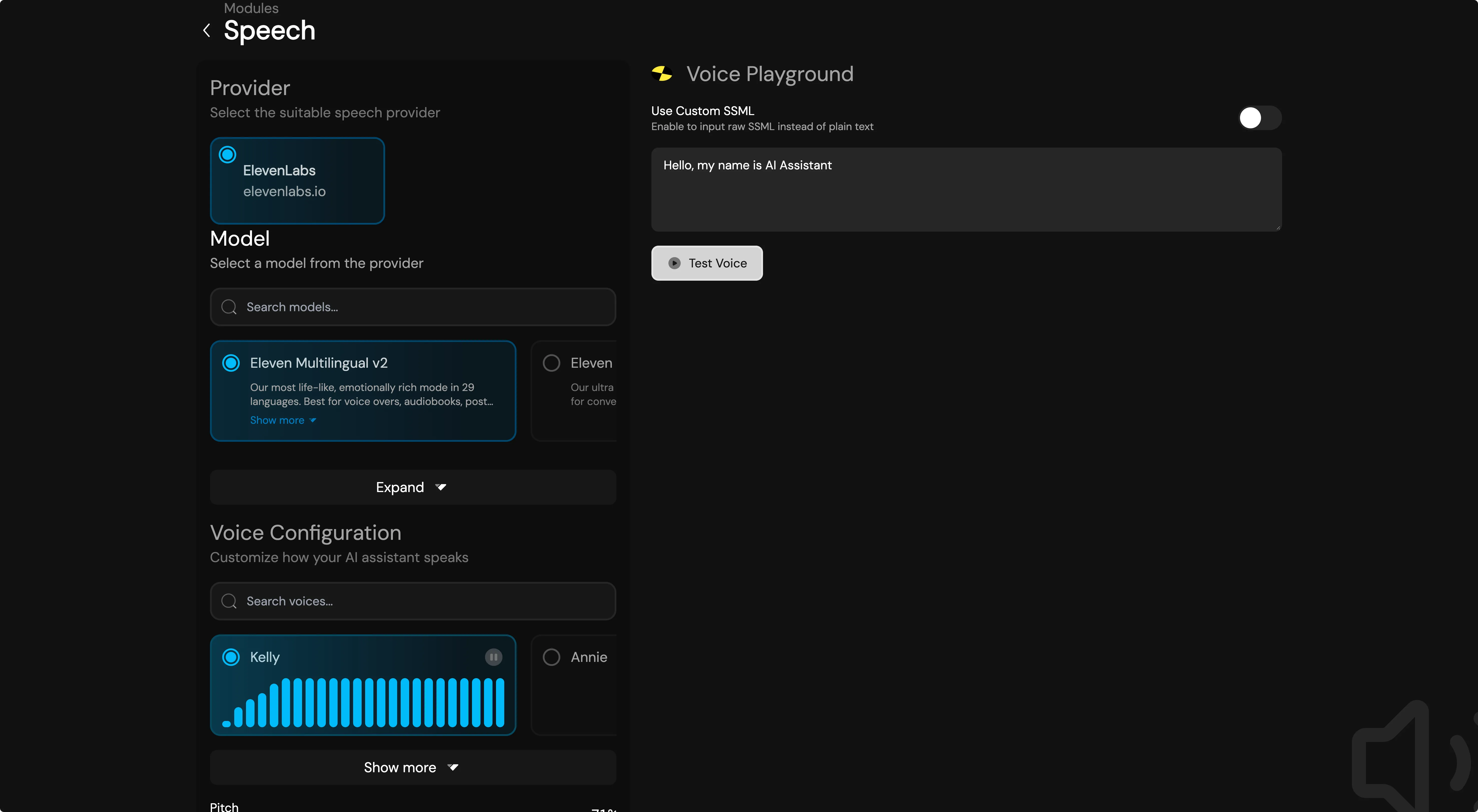

We finally support speech model configuration 🎉! (Previously, only ElevenLabs could be configured.) Since the new v0.1.2 version of another amazing project we were working on, called unspeech, it’s possible to request the Microsoft Speech service (a.k.a. Azure AI Speech service, or Cognitive Speech service) through @xsai/generate-speech, which means we finally have an OpenAI API compatible TTS for Microsoft.
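To make "OpenAI API compatible TTS" a bit more concrete, here is a minimal sketch of the request shape such an endpoint accepts: a `POST` to `/audio/speech` with `model`, `input`, and `voice` in a JSON body. This uses plain `fetch`-style options rather than @xsai/generate-speech itself, and the base URL, API key, model name, and voice ID in the usage comment are placeholders, so check the unspeech documentation for the real values.

```typescript
// Shape of an OpenAI-style speech request body.
interface SpeechRequest {
  model: string
  input: string
  voice: string
}

// Build the URL and fetch options without sending anything,
// so the request can be inspected or tested offline.
function buildSpeechRequest(baseURL: string, apiKey: string, body: SpeechRequest) {
  // Strip trailing slashes so there is exactly one slash before the path.
  const url = `${baseURL.replace(/\/+$/, '')}/audio/speech`
  return {
    url,
    init: {
      method: 'POST',
      headers: {
        'Authorization': `Bearer ${apiKey}`,
        'Content-Type': 'application/json',
      },
      body: JSON.stringify(body),
    },
  }
}

// Usage (all values are placeholders, not real unspeech settings):
// const req = buildSpeechRequest('http://localhost:8080/v1', 'YOUR_API_KEY', {
//   model: 'placeholder-model',
//   input: 'Hello, World!',
//   voice: 'placeholder-voice',
// })
// const audio = await (await fetch(req.url, req.init)).arrayBuffer()
```

Since every provider behind the proxy accepts this same shape, switching between ElevenLabs and Microsoft becomes a matter of changing the `model` and `voice` fields.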

But why was this so important to support?

It’s because, for the very first version of Neuro-sama, the text-to-speech service was powered by Microsoft. With the voice named Ashley, along with +20% pitch, you can get the same voice as Neuro-sama’s first version. Try it yourself:

Isn’t it the same? This is insane! That means we can finally approach what Neuro-sama can do with the new speech ability!
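For the curious, the Microsoft Speech service expresses a pitch change through SSML, using a `<prosody>` element with a relative `pitch` value. The sketch below builds such a document for the Ashley voice with +20% pitch. It assumes the voice ID is `en-US-AshleyNeural`, and `buildSsml` is a hypothetical helper; whether unspeech builds its SSML exactly like this is also an assumption.

```typescript
// Escape characters that are special in XML text and attribute values.
function escapeXml(text: string): string {
  return text
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;')
}

// Wrap text in SSML that selects a voice and shifts its pitch
// by a relative percentage (for example 20 becomes "+20%").
function buildSsml(text: string, voice: string, pitchPercent: number, lang = 'en-US'): string {
  const sign = pitchPercent >= 0 ? '+' : ''
  return [
    `<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="${escapeXml(lang)}">`,
    `<voice name="${escapeXml(voice)}">`,
    `<prosody pitch="${sign}${pitchPercent}%">${escapeXml(text)}</prosody>`,
    '</voice>',
    '</speak>',
  ].join('')
}

// Usage: the voice ID below is an assumption about how "Ashley" is named.
const ssml = buildSsml('Hello, I am Airi!', 'en-US-AshleyNeural', 20)
```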

1.382733

With all of those, we can get this result:

Nearly the same. But our story doesn’t end here: we haven’t implemented memory or better motion control yet, and the transcription settings UI is still missing. Hopefully we can get this done before the end of the month.

We are planning to have:

- Memory Postgres + Vector

- Embedding settings UI

- Transcription settings UI

- Memory DuckDB WASM + Vector

- Motion embedding

- Speaches settings UI

That’s all for today’s DevLog. Thank you to everyone who read all the way down here.

I’ll see you all tomorrow.

El Psy Congroo.